All Features

Donald J. Wheeler

An engineer once told me, “I work on project teams that have an average half-life of two weeks, implementing solutions with an average half-life of two weeks.” Time after time, and in place after place, our improvement efforts often fall short of expectations and fade away. In this article, I will…

Gleb Tsipursky

The 2024 U.S. presidential election is shaping up to be one of the closest in recent history, with Kamala Harris and Donald Trump locked in a dead heat in many polls. This razor-thin margin amplifies the effect of even small demographic changes, such as those driven by the recent surge in remote…

William A. Levinson

Most quality practitioners are familiar with the Taguchi loss function, which contends that the cost of any deviation from the nominal follows a quadratic model. This is in contrast to the traditional goalpost model, where anything inside the specification limits is good, and anything outside them…
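The contrast between the two loss models can be sketched numerically. This is a minimal illustration, not the author's own example: the function names, the nominal value, the specification limits, and the cost figure are all hypothetical, and the quadratic constant `k` is chosen so that both models agree at the specification limit.

```python
def goalpost_loss(y, lsl, usl, scrap_cost):
    """Traditional model: no loss inside the specification limits, full cost outside."""
    return 0.0 if lsl <= y <= usl else scrap_cost


def taguchi_loss(y, nominal, k):
    """Quadratic model: loss grows with the squared deviation from nominal."""
    return k * (y - nominal) ** 2


# Hypothetical numbers: nominal 10.0, limits 10.0 +/- 0.5, loss of 20.0 at a limit.
nominal, lsl, usl, scrap_cost = 10.0, 9.5, 10.5, 20.0
k = scrap_cost / (usl - nominal) ** 2  # calibrate so the two models agree at the limit

for y in (10.0, 10.25, 10.5, 10.6):
    print(y, goalpost_loss(y, lsl, usl, scrap_cost), round(taguchi_loss(y, nominal, k), 2))
```

Under these assumed numbers, a part halfway to the limit carries no loss in the goalpost model but a quarter of the limit cost in the quadratic model.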

Donald J. Wheeler

The objective of all improvement projects should be to improve the overall process. Everything else should be secondary to this objective. If you improve the efficiency of a support process, or even a portion of the core process, but at the same time lower the efficiency of the overall process,…

Harish Jose

Recently, I wrote about the process capability index and tolerance interval. Here, I’m writing about the relationship between the process capability index and sigma. The sigma number here relates to how many standard deviations the process window can hold.

A ±3 sigma contains 99.73% of the…
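The 99.73% figure and its link to the capability index can be checked with a short calculation. This is a sketch under stated assumptions rather than the author's derivation: it assumes a centered, normally distributed process and the usual definition Cp = (USL − LSL) / (6 sigma), and the function names are hypothetical.

```python
import math


def normal_coverage(k):
    """Fraction of a normal distribution within +/- k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))


def cp_for_sigma_level(k):
    """Cp when the specification limits sit at +/- k sigma: (USL - LSL) / (6 sigma) = k / 3."""
    return k / 3


for k in (3, 4, 5, 6):
    print(k, round(cp_for_sigma_level(k), 2), f"{normal_coverage(k):.7%}")
```

With limits at ±3 sigma the assumed process has Cp = 1 and about 99.73% coverage; wider process windows raise both numbers.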

Donald J. Wheeler

The four common capability and performance indexes collectively contain all of the summary information about process predictability, process conformity, and process aim that can be expressed numerically. As a result, any additional capability measures that your software may provide can only…

Donald J. Wheeler

In May 1924, Walter Shewhart wrote a memo that contained the first example of a process behavior chart (i.e., a “control chart”). It was a chart for individual values that would be known today as a p-chart. Shewhart’s insight was that “three-sigma” limits will filter out virtually all of the…

Scott A. Hindle

Walter A. Shewhart is lauded as the Father of Statistical Process Control (SPC) and is perhaps best remembered for the SPC control chart. The first record of Shewhart’s control chart is found in a Bell Telephone Laboratories internal memo from May 16, 1924, making today the 100th anniversary of…

Donald J. Wheeler

One hundred years ago this month, Walter Shewhart wrote a memo that contained the first process behavior chart. In recognition of this centennial, this column reviews four different applications of the techniques that grew out of that memo.

The first principle for interpreting data is that no data…

Douglas C. Fair, Scott A. Hindle

In less than two months we will celebrate the 100th anniversary of the invention of the control chart, a tool most often associated with statistical process control (SPC). Considering SPC from our modern perspective made us ask, “Is SPC still relevant?”

It’s a question asked within the purview of…

Donald J. Wheeler

When presented with a collection of data from operations or production, many will start their analysis by computing descriptive statistics and fitting a probability model to the data. But before you do this, there’s an easy test that you need to perform.

This test will quantify the chances that…

Donald J. Wheeler

Over the past two months we’ve considered the properties of lognormal and gamma probability models. Both of these families contain the normal distribution as a limit. To complete our survey of widely used probability models, this column will look at Weibull distributions, a family that doesn’t…

Donald J. Wheeler

Clear thinking and simplicity of analysis require concise, clear, and correct notions about probability models and how to use them. Here, we’ll examine the basic properties of the family of gamma and chi-square distributions that play major roles in the development of statistical techniques. An…

Scott A. Hindle, Douglas C. Fair

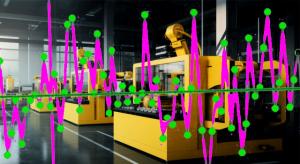

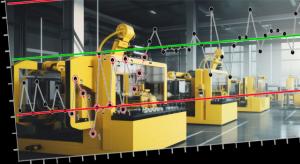

So far in this series our focus has remained on statistical process control (SPC) in manufacturing. We’ve alternated between more traditional uses of SPC that remain relevant in this digital era and discussing uses of SPC and its related techniques that are enabled by the marvels of modern…

Donald J. Wheeler

When do we need to fit a lognormal distribution to our skewed histograms? This article considers the basic properties of the lognormal family of distributions and reveals some interesting and time-saving characteristics that are useful when analyzing data.

The lognormal family of distributions…

Scott A. Hindle, Douglas C. Fair

You are assigned a new task to demonstrate that an existing process will have the capability to meet newer and tighter specifications. The change in specifications for critical-to-quality characteristic P is due to new regulatory requirements; hence, the specifications must be met. The task is…

Douglas C. Fair, Scott A. Hindle

Just a few decades ago, today’s personal technology was a science fiction pipe dream. Powerful computers (smart phones) that fit in our pockets; global positioning satellites for our traveling convenience; and homes where lights, security systems, and locks can be controlled remotely. It’s all just…

Scott A. Hindle, Douglas C. Fair

Parts 1, 2, and 3 of our series on statistical process control (SPC) have shown how data can be thoughtfully used to enable learning and improvement—and consequently, better product quality and lower production costs. Another area of SPC to tap into is that of measurement methods. How do we ensure…

Donald J. Wheeler

Fourteen years ago, I published “Do You Have Leptokurtophobia?” Based on the reaction to that column, the message was needed. In this column, I would like to explain the symptoms of leptokurtophobia and the cure for this pandemic affliction.

Leptokurtosis is a Greek word that literally means “thin…

Douglas C. Fair, Scott A. Hindle

Data overload has become a common malady. Modern data collection technologies and low-cost database storage have motivated companies to collect data on almost everything. The result? Data overload. Unfortunately, few companies leverage the information hidden away in those terabytes of data.

There…

Scott A. Hindle, Douglas C. Fair

We are one year away from the 100th anniversary of the creation of the control chart: Walter Shewhart created the control chart in 1924 as an aid to Western Electric’s manufacturing operations. Since it’s almost prehistoric, is it now time to leave the control chart technique—that started out using…

Donald J. Wheeler

In last month’s column, we looked at how process-control algorithms work with a process that is subject to occasional upsets. This column will consider how they work with a well-behaved process.

Last month we saw that process adjustments can reduce variation when they are reacting to real…

Douglas C. Fair, Scott A. Hindle

Today’s manufacturing systems have become more automated, data-driven, and sophisticated than ever before. Visit any modern shop floor and you’ll find a plethora of IT systems, HMIs, PLC data streams, machine controllers, engineering support, and other digital initiatives, all vying to improve…

Donald J. Wheeler

Many articles and some textbooks describe process behavior charts as a manual technique for keeping a process on target. For example, in Norway the words used for SPC (statistical process control) translate as “statistical process steering.” Here, we’ll look at using a process behavior chart to…

Donald J. Wheeler

As we learned last month, the precision to tolerance ratio is a trigonometric function multiplied by a scalar constant. This means that it should never be interpreted as a proportion or percentage. Yet the simple P/T ratio is being used, and misunderstood, all over the world. So how can we properly…