All Features

Leif Nyström

Management by objectives isn’t just a way to set direction for an organization. It’s a prerequisite for creating sustainable development and a culture of continuous improvement.

True success, however, comes not just from setting goals, but from ensuring they are actually achieved. Or, to…

Stephen Russek

In the evenings, after patients have left for the day, our research team visits the radiation oncology offices at the University of Colorado Anschutz Medical Campus to talk to medical physicists about how our research can help cancer patients. We also run experiments in their radiation suites.

The…

George Schuetz

All gauging equipment must be calibrated periodically to ensure it can perform the job for which it’s intended (i.e., measuring parts accurately).

This is true for every hand tool or gauge used in a manufacturing environment that verifies the quality of parts produced—from calipers and micrometers…

Paul Hanaphy

Regular inspection is absolutely vital for industrial transmission systems. Just like the gearbox in an everyday car, their components are prone to wear, misalignment, and fatigue, issues that can lead to machinery failure. This isn’t just a matter of downtime but of operator safety, too.

Traditionally,…

Akhilesh Gulati

I’ve had this conversation countless times—sometimes with a frustrated client, often with a colleague, and occasionally with my own reflection.

We hear familiar calls for help:
• “We need better communication.”
• “People need to collaborate more.”
• “We’ve lost our culture.”

These observations show…

Adam Grabowski

What’s truly holding your discrete manufacturing shop back from reaching its full potential? It’s often not the commonly cited culprits like labor shortages, razor-thin margins, or fierce competition. It’s more often paper: the unseen, insidious enemy.

Imagine your shop floor: stacks of traveler…

Gleb Tsipursky

The conversation about generative AI (gen AI) is unavoidable in today’s business landscape. It’s disruptive, transformative, and packed with potential—both thrilling and intimidating.

As organizations adopt gen AI to streamline operations, develop products, or enhance customer interactions, the…

Harish Jose

In this article, I’m looking at a question that’s rarely asked in management: What if the most responsible course of action isn’t to maximize benefit, but to minimize harm? In decision theory, this is expressed as the minimax principle. The idea is that one should minimize the worst possible outcome…

Felicitas Stuebing

At the corner of quality and assembly, design engineers are frequently confronted with unexpected, complex fluid process issues in the prototyping phase. These obstacles are reflected in voice-of-customer sprints and surveys revealing that medical device companies in particular stall out in the…

ETQ—Part of Hexagon

Even the smallest manufacturer would never consider using a typewriter to create an invoice or a Rolodex to manage a sales prospect list. So why, when it comes to quality management, are they often still using manual methods or home-brewed software that was never intended for today’s quality…

Rick Herman

Amidst uncertainty in manufacturing, AI adoption, labor market fluctuations, and salary disparities across industries and geographic regions, quality professional compensation can be difficult to navigate.

Without current job-level salary benchmarks, quality professionals from technicians to…

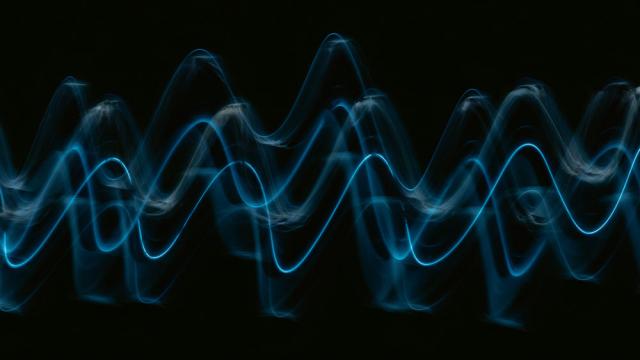

Donald J. Wheeler

One of the principles for understanding data is that while some data contain signals, all data contain noise. Therefore, before you can detect the signals you’ll have to filter out the noise. This act of filtration is the essence of all data analysis techniques. It’s the foundation for our use of…

Mike Figliuolo

Staff meetings can be incredibly productive. Or unproductive—and more often the latter. If your staff meetings are terrible, it’s your fault because you’re not structuring them well.

One of the most surreal business experiences I’ve ever had relates to a staff meeting. I’d joined a new team, and…

Artem Kroupenev

Quality has always been a defining metric in manufacturing when it comes to industry trust, brand longevity, and customer loyalty. Manufacturers are already expected to abide by stringent regulations. But as economic complexity rises and experienced operators retire, maintaining consistent quality…

Bryan Christiansen

From manufacturing and mining to hospitality and healthcare, computerized maintenance management systems (CMMS) have become all but essential. Wherever there are assets to maintain, a CMMS plays a critical role in reducing downtime, controlling costs, and keeping operations running smoothly.

But…

Mat Gilbert, John Robins

Physical AI—the embedding of digital intelligence into physical systems—is a promising but sometimes polarizing technology. Optimists point to the upside of combining AI and physical hardware: robot-assisted disaster zone evacuations, drone deliveries of critical supplies, and driver assistance…

Harish Jose

In this article, I want to explore an idea that is often framed in moral terms but is actually a cybernetic imperative: the necessity of diversity for viable systems. Whether we’re talking about societies, organizations, or even artificial intelligence systems, the principle remains consistent. A…

Erdem Dogukan Yilmaz, Tim Meyer

From the internet and smartphones to 3D printing, recent decades have ushered in general-purpose technology that increases efficiency and collapses the cost of routine tasks. The latest general-purpose technology—you guessed it, generative AI (gen AI)—has the potential to also extend the frontiers…

Robert Turner

Your social media profile headline is nothing more than a phrase on a screen: a concise summary of your skills and expertise. Although that blurb gets you noticed, the real headline for executives is how they lead.

What story is your leadership telling? As an executive, you know that staying…

ISO

In 2021, container ships idled for weeks outside the Port of Los Angeles, a stark visual reminder of just how fragile modern supply-chain reliability had become. The backlog sent shockwaves across industries. Factories stalled, shelves emptied, and businesses scrambled for alternatives. It was a…

Elizabeth Weddle

The quality systems most medtech teams are stuck with aren’t built for how they work today. 21 CFR Part 820 was first issued in 1978 under the authority of the Federal Food, Drug, and Cosmetic Act, long before today’s software industry took shape. And while the regulations themselves aren’t going anywhere, the world they…

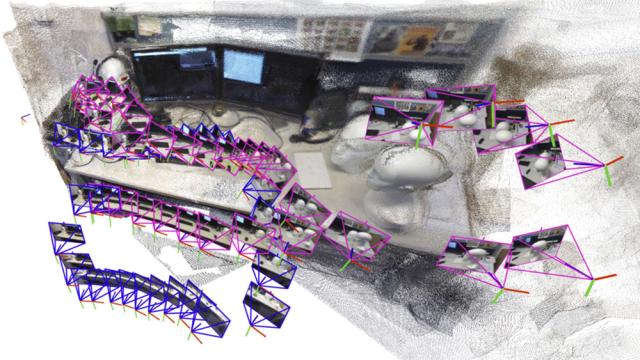

Adam Zewe

A robot searching for workers trapped in a partially collapsed mine shaft must rapidly generate a map of the scene and identify its location within that scene as it navigates the treacherous terrain.

Researchers have recently started building powerful machine-learning models to perform this…

Blake Griffin

The year 2025 has been rife with uncertainty for industrial automation OEMs and their customers. A cataclysmic shift in U.S. trade policy led to a marketwide mentality of “wait and see.” For automation OEMs, this has manifested broadly as delayed orders from customers rather than outright…

Manfred Kets de Vries

A young manager told me about the day she nearly quit her job. A major restructuring had left her team reeling. As targets shifted overnight, colleagues departed and rumors spread faster than facts. “I felt like I was living in a storm without a compass,” she said.

What changed her mind wasn’t a…

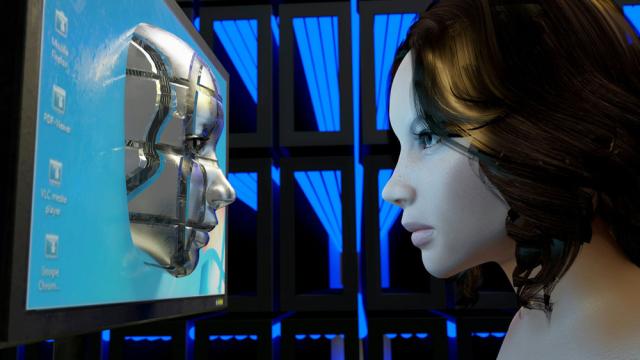

Adam Zewe

What can we learn about human intelligence by studying how machines “think”? Can we better understand ourselves if we better understand the artificial intelligence systems that are becoming a more significant part of our everyday lives?

These questions may be deeply philosophical, but for Phillip…