All Features

Ray Chalmers

All manufacturing companies must manage an ever-growing mountain of priceless inspection data. Yet measurement results, process iterations, and approval reports are scattered across hard drives and USB sticks. We live in a digital world that advances daily, yet obtaining, accessing, sharing, and…

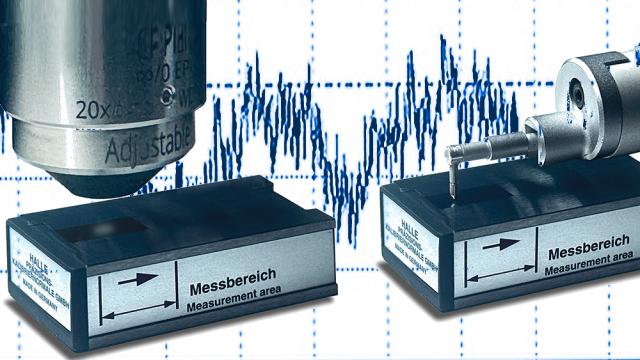

Mike Zecchino, Mark Malburg

Choosing the correct instrument for surface texture measurement can be confusing, given the wide range of options. Stylus-based instruments are the most prevalent in manufacturing. Yet, measuring a surface with a sharp stylus can seem old-fashioned when so many noncontact optical techniques are now…

Jeff Dewar

When we set out to film Episode 2, we faced a fundamental challenge: How do you make people care about errors they can’t see?

(See all the episodes here.)

Error propagation is critical to metrology, the science of measurement, but it’s abstract. These are mistakes measured in tiny amounts that…

Dirk Dusharme

In Episode 1 of The Quality Digest Roadshow, we talked about metrology standards and how those standards and traceability are the glue that holds industry together. While measurement standards are critical, they’re useless without the equipment, processes, and people that use the tools that measure…

Stephen Russek

In the evenings, after patients have left for the day, our research team visits the radiation oncology offices at the University of Colorado Anschutz Medical Campus to talk to medical physicists about how our research can help cancer patients. We also run experiments in their radiation suites.

The…

George Schuetz

All gauging equipment must be calibrated periodically to ensure it can perform the job for which it’s intended (i.e., measuring parts accurately).

This is true for every hand tool or gauge used in a manufacturing environment that verifies the quality of parts produced—from calipers and micrometers…

Paul Hanaphy

Regular inspection is absolutely vital for industrial transmission systems. Just like the gearbox in an everyday car, components are prone to wear, misalignment, and fatigue—issues that can lead to machinery failure. This isn’t just a matter of downtime but operator safety, too.

Traditionally,…

Felicitas Stuebing

At the corner of quality and assembly, design engineers are frequently confronted with unexpected, complex fluid process issues in the prototyping phase. These obstacles are reflected in voice-of-customer sprints and surveys revealing that medical device companies in particular stall out in the…

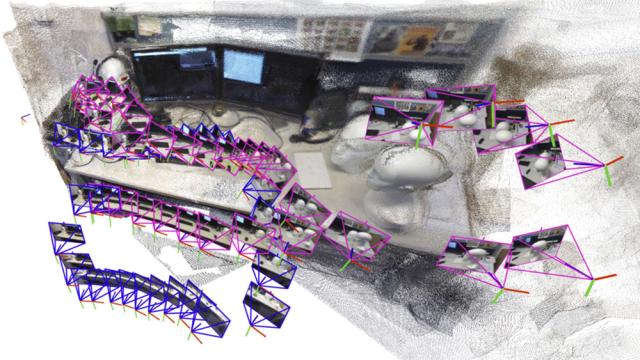

Adam Zewe

A robot searching for workers trapped in a partially collapsed mine shaft must rapidly generate a map of the scene and identify its location within that scene as it navigates the treacherous terrain.

Researchers have recently started building powerful machine-learning models to perform this…

Creaform

Your company works hard to bring quality products to market, but your current inefficient development process slows you down. Your engineers rely on traditional tools like measuring tapes, calipers, verniers, or photos to gather dimensions and document how to shorten time to market and lower…

Nimax

The global coding- and marking-equipment market is on a clear growth path. As shown in a recent Grand View Research report, the market was worth $17,528 million worldwide by the end of 2024.

Furthermore, GVR’s projections estimate the market value will reach $24,927 million by 2030, with a…

Curtis Lynn

I’ve worked in manufacturing procurement for just over 25 years. In that time, I’ve learned one thing above all else—precision is the backbone of quality. Every product we make, every part we produce, and every component we measure relies on measuring tool accuracy. If measurements are off, quality…

Paul Hanaphy

When components leak, sizing them up for repairs can be extremely difficult. This isn’t just due to distance and locale—many are underground or underwater—but also safety issues. If components carry hazardous substances, manual measurement is inherently riskier than noncontact alternatives.…

Jeff Dewar

I’m thrilled to announce something we’ve been working on for a year and a half—a project that took us 30,000 miles across America and into the heart of industries that most people never see. On Nov. 12, 2025, Quality Digest will premiere the first episode of The Quality Digest Roadshow, a 12-…

Dirk Dusharme

Literally, everything that surrounds us has been measured—and I do mean literally. Look around you: Your desk, your chair, your pen, your pencil, the lead in the pencil, the paint on the pencil, the gas in your stove, the stove itself—it’s all been measured. The color of your orange juice, the…

Creaform

In motorsport, performance isn’t defined by a single factor. It’s the sum of countless details, each playing a decisive role when pushing speeds up to 200 mph (320 km/h). From how a driver sits in the car to how the bodywork complies with strict regulations, accuracy can mean the difference between…

Rajas Sukthankar

Simply put, we live in a digital world—both in our personal lives and on the job. In manufacturing, challenges abound. Customization, fast-changing business and technology environments, and workforce and talent-pool concerns combine to present challenges for manufacturers of all types.

Among…

Alex de Vigan

During the past decade, manufacturers have wired their plants with sensors, robots, and software. Yet many “AI-driven” systems still miss the mark. They analyze numbers but fail to understand the physical reality behind them: the parts, spaces, and movements that make up production itself.

“…

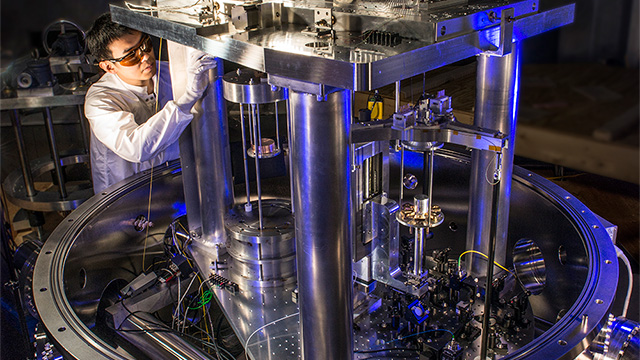

Andrew Iams

I grew up outside Pittsburgh, widely known as “Steel City.” Although the city is no longer the center of steel or heavy manufacturing in America, its past remains a proud part of its identity.

Like many Pittsburghers, my family’s story is tied to this industrial legacy. My relatives immigrated…

George Schuetz

Digital calipers are one of the most common hand tools used on the shop floor. In a manufacturing plant, under a quality control system, these tools must be checked and calibrated regularly.

Past articles have discussed the pros and cons of doing gauge calibrations internally or by an external…

David Mihal

Quality Digest was recently fortunate enough to get more information on Geomagic Design X for reverse engineering from David Mihal, global commercial director of the Geomagic software product line within Hexagon’s Manufacturing Intelligence division. The newly available software converts data from…

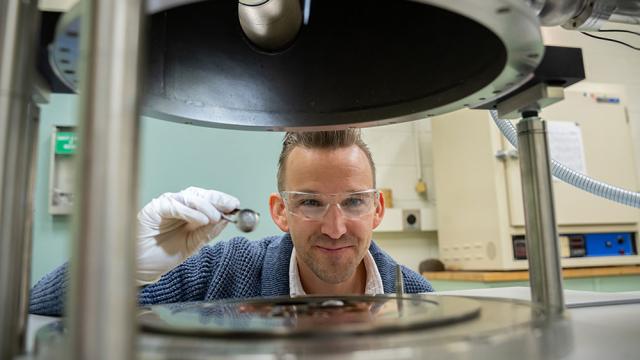

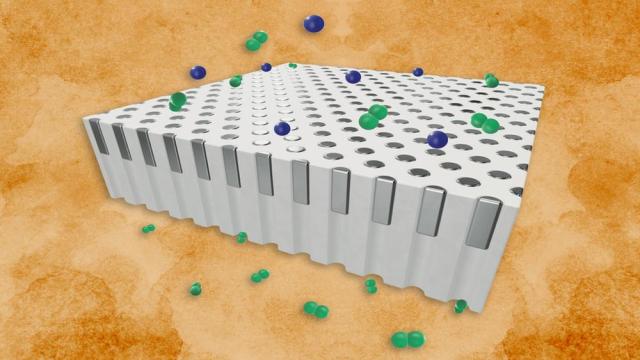

Jennifer Chu

Palladium is a key to jumpstarting a hydrogen-based energy economy. The silvery metal acts as a natural gatekeeper against every gas except hydrogen, which it readily allows through. For its exceptional selectivity, palladium is considered one of the most effective materials for filtering gas…

Silke von Gemmingen

Powder bed-based laser melting of metals (PBF-LB/M) is a key technology in additive manufacturing that makes it possible to produce highly complex and high-performance metal components with customized material and functional properties. Used in numerous industries from aerospace and medical…

Dennis Wylie

You’ve probably had the experience of visiting a contemporary factory floor and being amazed by all the incredible robots, sensors, and machines working like a finely choreographed dance. It’s quite remarkable—until there’s a malfunction. And that’s something which has frustrated quality engineers…

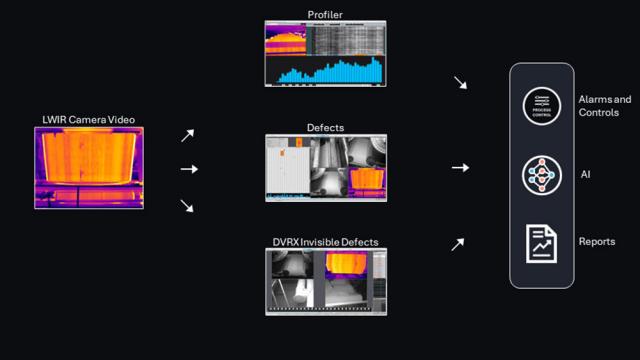

FLIR

Recent developments in thermal signature analytics have expanded the applications of thermal cameras beyond routine troubleshooting; they now contribute to paper machine control, energy usage benchmarking, wet streak detection, and the identification and prediction of certain classes of sheet…