Content by Rip Stauffer

Wed, 04/12/2023 - 00:03

A couple of years ago, my wife decided to surprise me by taking me over to our local Tesla dealership so I could test drive a Tesla. We put a deposit down to hold our place in line, and two months later took delivery of a Model Y Performance. I…

Tue, 09/18/2018 - 12:00

I must admit, right up front, that this is not a totally unbiased review. I first became aware of Davis Balestracci in 1998, when I received the American Society for Quality (ASQ) Statistics Division Special Publication, Data “Sanity”: Statistical…

Wed, 06/06/2018 - 12:03

A lot of people in my classes struggle with conditional probability. Don’t feel alone, though. A lot of people get this (and simple probability, for that matter) wrong. If you read Innumeracy by John Allen Paulos (Hill and Wang, 1989), or The Power…

Mon, 10/09/2017 - 12:03

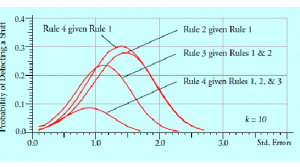

How do extra detection rules work to increase the sensitivity of a process behavior chart? What types of signals do they detect? Which detection rules should be used, and when should they be used in practice? For the answers, read on.

In 1931, …

Ranges vs. Standard Deviations: Which Way Should You Go?

A look at the mathematical difference between X-bar R and X-bar S

Tue, 06/02/2015 - 13:04

Recently, in one of the many online discussion groups about quality, Six Sigma, and lean, this question was posed: “Can X-bar R and X-bar S be used interchangeably based on samples size (n) if the subgroup size is greater than one and less than…

Is Six Sigma Dead?

Postmortem vs. prescription

Thu, 12/04/2014 - 15:42

A number of recent articles in quality literature (and in the quality blogosphere) have posited the death or failure of Six Sigma. More articles, from many of the same sources, discuss the outstanding success of current Six Sigma efforts in…

Render Unto Enumerative Studies…

Data from a time series can’t be ‘mixed rigorously’ and then analyzed

Wed, 07/31/2013 - 12:22

In one recent online forum, a Six Sigma Black Belt asked a question about validating samples—how to ensure that when they are taken, they would reflect (i.e., represent) the population parameter. His purpose: to understand the baseline for a…

Drop the Argument, Channel the Value Stream

Why we need to effect change in systems

Fri, 09/28/2012 - 13:36

Editor’s note: In response to Kyle Toppazzini’s article, “Lean Without Six Sigma May Be a Failing Proposition,” published in the Sept. 27, 2012, issue of Quality Digest Daily, Rip Stauffer left the following observant comment.

I started my career…

Your World Is Not Red or Green

Your dashboards shouldn’t be, either

Mon, 06/18/2012 - 10:30

I recently closed the doors of my own consulting company on the prairie in Minnesota and headed back into the wild, wacky, wonderful world of larger consulting groups, joining a group in Northern Virginia. One of the consequences of that transition…

Some Problems with Attribute Charts

Welcome to the wild, wacky, wonderful world of attributes charts!

Thu, 04/01/2010 - 10:27

It’s better to measure things when we can; that’s been well-established in the quality literature over the years. The use of go/no-go gauges will always provide much less information for improvement than measuring the pieces themselves. However, we…