All Features

James Gaines, Knowable Magazine

In 2004, the United States’ Defense Advanced Research Projects Agency (DARPA) dangled a $1 million prize for any group that could design an autonomous car that could drive itself through 142 miles of rough terrain from Barstow, California, to Primm, Nevada. Thirteen years later, the U.S. Department…

Jon Speer

Medical device product development and risk management are often treated as entirely separate processes. Sure, there is usually acknowledgement and understanding that these two processes are related. But it is important to realize that product development and risk management share more than that.…

Dirk Dusharme

Every company wants to succeed, but not all can say they meet the current requirements to do that. More than a focus on capital, business plans, or staff, a successful business in 2022 must operate digitally. Yet for the 45 percent of small and medium-sized businesses (SMBs) that still rely on…

Laurie Flynn

A Stanford Medicine-led study has found that borrowing certain billing- and insurance-related procedures from other countries could lead to policies that drastically lower healthcare costs in the U.S.

The new study, published in the August edition of Health Affairs, compares costs of healthcare…

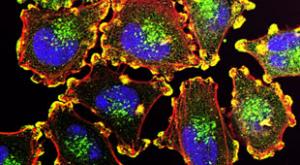

Bendta Schroeder

The first step in choosing the appropriate treatment for a cancer patient is to identify their specific type of cancer, including determining the primary site: the organ or part of the body where the cancer begins.

In rare cases, the cancer’s origin can’t be determined, even with extensive testing…

Dwayne Duncum

The workplace has changed forever, having gone through a revolution similar to the Industrial Revolution. Our workplaces are diverse, complex, and frequently changing. If we take any lesson from the Covid pandemic, it’s that the way we work, where we work, and how we work have fundamentally shifted…

Etienne Nichols

I know what you’re thinking. You’ve got a medical device prototype that the FDA has categorized as Class I. You’re ready to push forward to manufacturing or marketing the device, since there are no formal requirements for design controls. “So why would I waste time on design controls?”

The fact is…

Catherine Barzler

Falls are a serious public health issue that result in tens of thousands of deaths annually while racking up billions of dollars in healthcare costs. Although there has been extensive research into the biomechanics of falls, most current approaches study how the legs, joints, and muscles act…

Jennifer Chu

Ultrasound imaging is a safe and noninvasive window into the body’s workings, providing clinicians with live images of a patient’s internal organs. To capture these images, trained technicians manipulate ultrasound wands and probes to direct sound waves into the body. These waves reflect back out…

Grant Ramaley

The FDA Quality System Regulation (QSR) 21 CFR Part 820 was written in 1997 to harmonize with ISO 13485:1996. The goal was to relieve some of the burden of manufacturers having to meet two different criteria, the FDA’s and ISO 13485.

But by 2003, ISO 13485 had changed so significantly that the FDA…

Claudine Mangen

Work has become an around-the-clock activity, courtesy of the pandemic and technology that makes us reachable anytime, anywhere. Throw in expectations to deliver fast and create faster, and it becomes hard to take a step back.

Not surprisingly, many of us are feeling burned out. Burnout, which often…

Gregory Way

Drugs don’t always behave exactly as expected. While researchers may develop a drug to perform one specific function that may be tailored to work for a specific genetic profile, sometimes the drug might perform several other functions outside of its intended purpose.

This concept of drugs having…

Adam Zewe

Physicians often query a patient’s electronic health record for information that helps them make treatment decisions, but the cumbersome nature of these records hampers the process. Research has shown that even when a doctor has been trained to use an electronic health record (EHR), finding an…

Karina Montoya

Close to 9 million people in India suffer from hepatitis C. If left untreated, the virus leads to cirrhosis or liver damage, which eventually causes death from organ failure or cancer. On average, a 50-year-old man in India with asymptomatic liver damage who doesn’t receive treatment is expected to…

Tom Rish

Your design history file (DHF) is one of the most critical components of your QMS. That’s because the DHF should contain all the product development documentation for a specific medical device. Its purpose is to show regulatory bodies and internal stakeholders that you appropriately followed the…

Patricia Santos-Serrao

The pharmaceutical industry has seen significant upheaval and disruption during the past several years. These changes are due in part to the impacts of Covid—for example, interruptions in the supply chain and overwhelming market demand for shortened production times.

They are also being driven by…

Mike John

This article has been republished with permission from Medical Plastics News.

While ISO 13485 sets the standard for quality management systems (QMS) in medical device manufacturing, metrology is often treated as an afterthought and used simply to validate products and detect defects at the end of…

Gleb Tsipursky

The pandemic has made organizations aware of the need for a new C-suite leader, the CHO, or chief health officer. This has been driven by recognizing the importance of employee health for engagement, productivity, and risk management, along with lowering healthcare insurance costs. At the same time…

Ann Brady

Safer food, better health: This was the theme of World Food Safety Day (June 7, 2022), and it’s obvious, is it not, that access to safe food is vital for life and health? The challenge in today’s world is how to achieve this. Global food systems, already under pressure before the pandemic, are now…

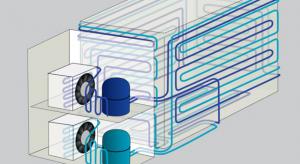

Jamie Steiner

Ultra-low temperature freezers became popular due to the storage of Covid-19 vaccines, but they have been important components of laboratories for many years. There’s a lot, however, to think about—quality, productivity, maintenance, different types of technology, warranties, etc. And if you end up…

Prashant Yadav

During the past two and a half years, we’ve seen unparalleled innovation and private-public collaboration in the global fight against Covid-19. The rapid development and rollout of new vaccines, diagnostic tests, and therapeutics have saved millions of lives.

However, these developments haven’t…

David Stevens

The United States has more than 6,000 hospitals, and each one has thousands, if not tens of thousands, of clinical assets, such as imaging machines, ventilators, and IV pumps. Managing this equipment becomes a mighty task when hospital staff must handle the monitoring, repair, and maintenance of…

Kari Miller

Quality management is essential to the growth and performance of any organization. It’s a valuable resource in the effort to ensure that products and services satisfy the highest quality requirements and deliver positive customer results.

Pharmaceutical manufacturers must ensure that the…

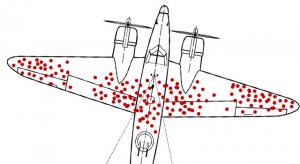

William A. Levinson

Quality-related data collection is useful, but statistics can also deliver misleading and even dysfunctional results when incomplete. This is often the case when information is collected only from surviving people or products, extremely satisfied or dissatisfied customers, or propagators of bad…

The Un-Comfort Zone With Robert Wilson

In a recent column, I wrote about the power of suggestion. I stated, “When our subconscious mind is exposed to a constantly repeated message, it’s going to penetrate unless we are cognizant of it.” Becoming conscious of indoctrinating media messages is important, but recognizing your own internal…