The requirement for risk-based thinking is among the most significant changes in ISO 9001:2015. Army Techniques Publication (ATP) 5-19, Risk Management, is a public-domain reference that supports this requirement.

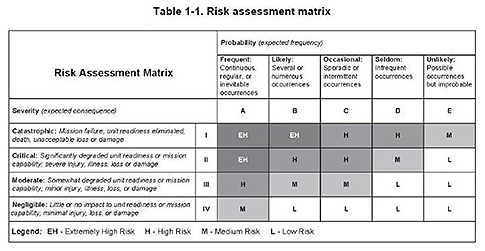

ATP 5-19 includes a risk assessment approach that is similar to failure mode and effects analysis (FMEA), but is considerably simpler. As seen in the table in figure 1, it uses only four severity and five occurrence (probability) ratings, and generates one of four hazard levels as the counterpart to the FMEA’s risk priority number (RPN).

Figure 1: ATP 5-19 risk assessment matrix.
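To make the contrast with FMEA concrete, here is a minimal Python sketch of a matrix lookup next to an RPN calculation. The article states only that there are four severity ratings, five probability ratings, and four resulting levels; the rating names, the level abbreviations, and every cell value in `RISK_MATRIX` are assumptions made for illustration and should be checked against figure 1 or ATP 5-19 itself. The function names `risk_level` and `rpn` are likewise hypothetical.

```python
from enum import IntEnum


class Severity(IntEnum):
    # Assumed names for the four severity ratings (row index into the matrix).
    CATASTROPHIC = 0
    CRITICAL = 1
    MODERATE = 2
    NEGLIGIBLE = 3


class Probability(IntEnum):
    # Assumed names for the five probability ratings (column index).
    FREQUENT = 0
    LIKELY = 1
    OCCASIONAL = 2
    SELDOM = 3
    UNLIKELY = 4


# Assumed cell values: EH = extremely high, H = high, M = medium, L = low.
# Verify every cell against figure 1 before relying on it.
RISK_MATRIX = (
    ("EH", "EH", "H", "H", "M"),  # catastrophic
    ("EH", "H", "H", "M", "L"),   # critical
    ("H", "M", "M", "L", "L"),    # moderate
    ("M", "L", "L", "L", "L"),    # negligible
)


def risk_level(severity: Severity, probability: Probability) -> str:
    """Look up the risk level for one hazard: a table read, no arithmetic."""
    return RISK_MATRIX[severity][probability]


def rpn(severity: int, occurrence: int, detection: int) -> int:
    """Conventional FMEA risk priority number: the product of three 1-10 ratings."""
    for rating in (severity, occurrence, detection):
        if not 1 <= rating <= 10:
            raise ValueError("FMEA ratings are conventionally 1 through 10")
    return severity * occurrence * detection


if __name__ == "__main__":
    print(risk_level(Severity.CRITICAL, Probability.OCCASIONAL))
    print(rpn(7, 4, 3))
```

The matrix approach yields one of only four outcomes from two inputs, whereas a conventional RPN multiplies three ratings (severity, occurrence, detection) into a number between 1 and 1,000. That difference is what makes the matrix considerably simpler to apply.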

…
