In most healthcare settings, workers attend weekly, monthly, or quarterly meetings where performance is reported, analyzed, and compared to goals in an effort to identify trends. Reports often consist of month-to-month comparisons with “thumbs up” and “thumbs down” icons in the margins, along with an alleged trend drawn from the past three months, or from a comparison of the current month, the previous month, and the same month a year ago.

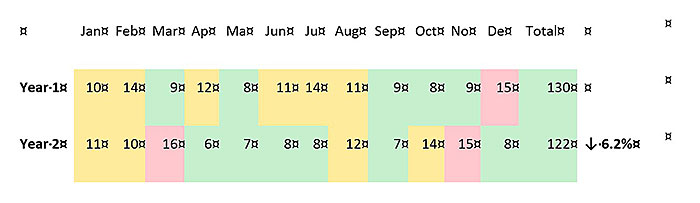

The data below are typical of the performance data that leadership might discuss at a quarterly review, in this case a year-end review. Suppose these are healthcare data on a key safety index, for instance some combination of complaints, patient falls, medication errors, pressure sores, and infections. The goal is fewer than 10 events per month (fewer than 120 per year). In line with the craze for “traffic light” performance reporting, colors are assigned as follows:

• Less than 10 = green

• 10–14 = yellow

• 15 or higher = red
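As a minimal sketch, the color rule above can be written as a single function over one month's event count. The name `traffic_light` is hypothetical, and the sketch assumes whole-number monthly counts.

```python
def traffic_light(monthly_events: int) -> str:
    """Assign a traffic-light color to one month's event count.

    Thresholds follow the rule in the text:
    fewer than 10 = green, 10-14 = yellow, 15 or higher = red.
    """
    if monthly_events < 10:
        return "green"
    if monthly_events <= 14:
        return "yellow"
    return "red"
```

Note that the function looks at one month in isolation against fixed cutoffs; nothing in it reflects how much the count normally varies from month to month.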

Year-end review performance data

…

Comments

A better way?

Hi, Davis,

Your article centres on a “better way” of using and reviewing data (plotting the data in time order, run charts, control charts …) that also provides a basis for action.

In how many places do you see this “better way” operating successfully?

Where it is, what do you think are the key ingredients that make it happen?

Where it is not, what do you think is the main obstacle?
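The control-chart approach mentioned in this comment can be illustrated with one common chart type, the individuals (XmR) chart, applied to monthly event counts. This is a sketch under stated assumptions: the choice of an XmR chart, the function names `xmr_limits` and `signals`, and clipping the lower limit at zero for counts are all illustrative choices, and only the simplest detection rule (a point outside the natural process limits) is shown; run-chart rules are omitted.

```python
from statistics import mean


def xmr_limits(values):
    """Return (center, lower, upper) for an individuals (XmR) chart.

    Limits are mean +/- 2.66 * average moving range, where
    2.66 = 3 / d2 and d2 = 1.128 for moving ranges of two points.
    """
    if len(values) < 2:
        raise ValueError("need at least two observations")
    # Moving ranges: absolute difference between consecutive months.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = mean(values)
    avg_mr = mean(moving_ranges)
    upper = center + 2.66 * avg_mr
    # Assumption: event counts cannot be negative, so clip at zero.
    lower = max(0.0, center - 2.66 * avg_mr)
    return center, lower, upper


def signals(values):
    """Indices of points outside the natural process limits."""
    _, lower, upper = xmr_limits(values)
    return [i for i, v in enumerate(values) if v > upper or v < lower]
```

Unlike a fixed color cutoff, the limits here are computed from the data's own month-to-month variation, so a month is flagged only when it falls outside what the process routinely produces.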

My two cents

I don't know what Davis's answers will be, but I would imagine they would run close to mine:

1. In not nearly enough places, especially outside of manufacturing operations

2. Key ingredients to make it happen: Constancy of purpose, and leaders who understand variation (and, hopefully, the rest of the System of Profound Knowledge) and who want to KNOW what their processes will do instead of guessing...

3. Obstacles: The system for educating and training managers, which, for the most part, does not teach them these things; and clinging to MBO, carrot-and-stick, and firefighting paradigms of management.
