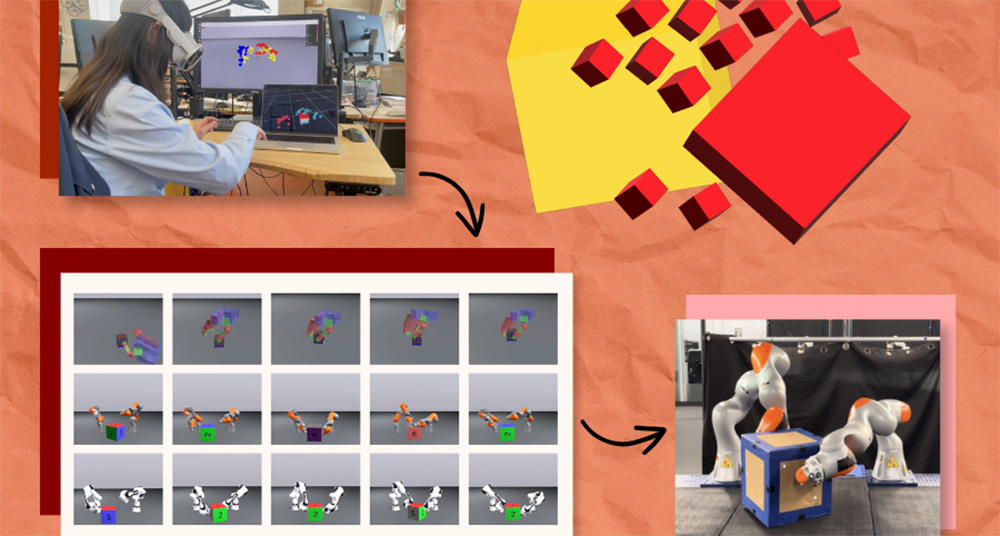

Image designed by Alex Shipps/MIT CSAIL using photos from the researchers.

PhysicsGen can multiply a few dozen virtual reality demonstrations into nearly 3,000 simulations per machine for mechanical companions like robotic arms and hands.

When ChatGPT or Gemini gives you what seems to be an expert response to your burning questions, you may not realize how much information it draws on to give that reply. Like other popular generative artificial intelligence (AI) models, these chatbots are built on backbone systems called foundation models that train on billions, or even trillions, of data points.

In a similar vein, engineers are hoping to build foundation models that train a range of robots on new skills like picking up, moving, and putting down objects in places like homes and factories. The problem is that it’s difficult to collect and transfer instructional data across robotic systems. You could teach your system by teleoperating the hardware step by step using technology like virtual reality (VR), but that can be time-consuming. Training on videos from the internet is less instructive, since the clips don’t provide a step-by-step, specialized task walk-through for particular robots.

…
