Wharton

When artificial intelligence burst into mainstream business consciousness, the narrative was compelling: Intelligent machines would handle routine tasks, freeing humans for higher-level creative and strategic work. McKinsey research sized the long-term AI opportunity at $4.4 trillion in added productivity growth potential from corporate use cases, with the underlying assumption that automation would elevate human workers to more valuable roles.


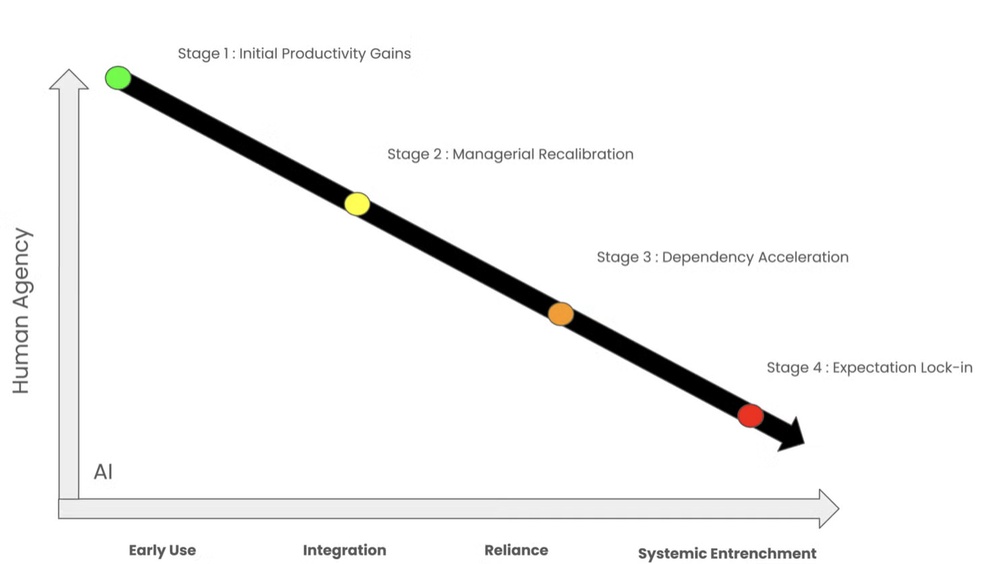

Yet something unexpected has emerged from the widespread adoption of AI. Three-quarters of surveyed workers were using AI in the workplace in 2024, but instead of experiencing liberation, many found themselves caught in an efficiency trap: a mechanism that moves in only one direction, toward ever-higher performance standards.

…
