On the face of it, it seems impossible for skewed variables to add up to a normally distributed result. Yet both common experience and mathematical theory show us that this does indeed happen. In fact, it is a fundamental property of probability theory, and it in turn explains the robustness of the process behavior chart.
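The claim that sums of skewed variables drift toward a normal shape can be checked with a quick simulation. The sketch below is an illustration under our own assumptions, not the article's method. It uses exponentially distributed values (a strongly right-skewed distribution, skewness 2) as the skewed ingredient, adds `n_terms` of them together, and measures the skewness of the sums. The helper names `sample_skewness` and `skewness_of_sums` are hypothetical. A normal distribution has skewness 0, so a shrinking skewness shows the sums becoming more symmetric. That is necessary for approaching normality, though not by itself proof of it.

```python
import random
import statistics

def sample_skewness(xs):
    """Moment-based skewness: mean cubed deviation divided by the cubed (population) standard deviation."""
    m = statistics.fmean(xs)
    s = statistics.pstdev(xs)
    return sum((x - m) ** 3 for x in xs) / (len(xs) * s ** 3)

def skewness_of_sums(n_terms, n_samples=20000, seed=1):
    """Simulate n_samples sums of n_terms exponential(1) values; return the skewness of those sums."""
    rng = random.Random(seed)  # fixed seed so the output is repeatable
    sums = [sum(rng.expovariate(1.0) for _ in range(n_terms)) for _ in range(n_samples)]
    return sample_skewness(sums)

for n in (1, 2, 4, 16):
    # Theory: a sum of n exponential(1) values is gamma(n, 1), whose skewness is 2 / sqrt(n).
    print(n, round(skewness_of_sums(n), 2), round(2 / n ** 0.5, 2))
```

The simulated skewness should track the theoretical value 2/√n, falling from about 2 for a single term to about 0.5 for a sum of sixteen terms.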


What happens when we add variables?

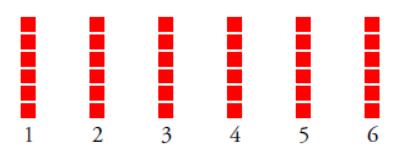

Consider a pair of dice used in games of chance. Each die has six faces, marked with from one to six spots. If the die is fair, we expect each face to turn up approximately the same number of times in any series of repeated rolls. Here we say that each outcome from one to six is equally likely, and we expect any histogram of the outcomes to look something like figure 1.

Figure 1: Outcomes for the roll of one die
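To see the roughly flat histogram of figure 1 emerge, one can simulate repeated rolls of a single fair die. This is a minimal sketch, assuming Python's pseudo-random generator is an adequate stand-in for a fair die. The function name `roll_counts`, the 6,000 rolls, and the fixed seed are our own illustrative choices.

```python
import random
from collections import Counter

def roll_counts(n_rolls, seed=42):
    """Roll one simulated fair six-sided die n_rolls times and count how often each face appears."""
    rng = random.Random(seed)  # fixed seed so the output is repeatable
    counts = Counter(rng.randint(1, 6) for _ in range(n_rolls))
    return {face: counts[face] for face in range(1, 7)}

counts = roll_counts(6000)
for face, count in counts.items():
    # One '#' per 50 rolls gives a rough text histogram comparable to figure 1.
    print(face, count, "#" * (count // 50))
```

With 6,000 rolls each face is expected about 1,000 times. The counts will not be exactly equal, but the bars should be of similar length, which is what "equally likely" looks like in practice.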

…

Comments

Dr Wheeler article

Another fabulous article full of precious knowledge and insight. Thank you Don!

Don Wheeler's article on non-normal data

Don, this was an excellent article on how to handle skewness in your data. The law of averages using larger sample sizes really shows the difference in normality. Thank you, Don.
