During my recent travels speaking at conferences and consulting, I have noticed that root cause analysis (RCA) seems to have taken on a life of its own. It is now a well-established subindustry in any organization, regardless of the organization's chosen approach to improvement.


There are many things that “shouldn’t” happen. Why not consider such incidents as undesirable variation and get back to basics? One of Deming’s principles was that there are two kinds of variation—common cause and special cause—and that treating one as the other makes things worse.

And the human tendency is to treat virtually all variation as special cause, and RCA is one more example of that tendency.

Has anyone considered whether things that “shouldn’t” happen might be common cause, that is, whether one’s organization is “perfectly designed” to produce them? What might be the effect of performing multiple RCAs in such cases?
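One common way to separate the two kinds of variation, in the Shewhart and Deming tradition, is a process behavior chart. The sketch below assumes an individuals (XmR) chart built from monthly incident counts and applies only the simplest detection rule: a point outside the natural process limits signals special cause. The function names `xmr_limits` and `special_cause_points` and the counts are hypothetical illustrations, not data from this article. If no point falls outside the limits, each incident that "shouldn't" have happened is most plausibly common cause, and a separate RCA for each one treats it as special cause.

```python
from statistics import mean


def xmr_limits(values):
    """Return (center, lower, upper) natural process limits for an XmR chart."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    center = mean(values)
    # Moving ranges: absolute differences between consecutive observations.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    avg_mr = mean(moving_ranges)
    # 2.66 = 3 / d2, where d2 = 1.128 for moving ranges of two points.
    lower = center - 2.66 * avg_mr
    upper = center + 2.66 * avg_mr
    return center, lower, upper


def special_cause_points(values):
    """Indices of points beyond the natural process limits (rule 1 only)."""
    _, lower, upper = xmr_limits(values)
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical monthly incident counts, for illustration only.
monthly_incidents = [4, 6, 3, 5, 7, 4, 5, 6, 3, 5, 4, 6]

if __name__ == "__main__":
    center, lower, upper = xmr_limits(monthly_incidents)
    print(f"center={center:.2f}, limits=({lower:.2f}, {upper:.2f})")
    print("special-cause months:", special_cause_points(monthly_incidents))
```

With these hypothetical counts, no month falls outside the limits, so the chart gives no reason to investigate any single month on its own; the system as a whole would have to be studied instead. Appending a month with 15 incidents does produce a signal at that point. Practical charts usually add further run rules, which this sketch omits.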

‘Because we worked so hard!’

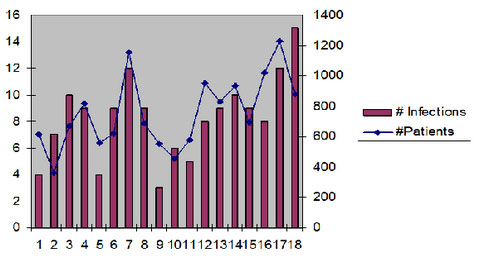

I was at a conference where one of the poster sessions proudly declared that they had reduced infections in a pediatric unit. It used the following display:

…

Comments

Bias for Action

“The human tendency is an expectation of quickly getting to the bottom of things, getting the forms filled out, fixing the problem identified, and getting back to work.” Amen.

I am reminded that those who get ahead have a "bias for action." I once had a boss who was Director of Engineering for a large corporation. We shared many interests, but not when it came to methods for getting results. He was fast; I was slow. We finally seemed to hit it off when I told him he was an empiricist and I was a rationalist. That is, in the course of a year, he and his staff would try 100 things, whereas I would study 100 things and try the 10 I thought best. So the question between us was who would produce the better list of successful outcomes. I don't think we ever drew a conclusion, but our relationship improved considerably.

I had another boss whose typical response to a customer problem was to immediately convene a relevant group, go around the table listing probable causes, and then have the group rank-order the most likely causes. Finally, he would solicit courses of action to "solve" the most likely problem and again have the group rank-order the "best" actions. At this point, usually less than an hour into the meeting, he would return to his office and call the customer to say we were on our way to a solution. The customers seemed to love this speed, even if the results were off the mark for lack of serious thought and investigation.

Regards,

Bill Pound, PhD

Sitting at a table listing causes

Your comment about your boss describes what I think is the biggest problem in root cause analysis. Empirical data, and actually looking at the failure, seem to be ignored in most RCA literature and discussions of the topic.

Would you mind if I quote your comment in conference talks and potentially in an article? Normally, I would just quote and use proper references, but I would rather not quote or paraphrase a comment without permission.

Matt Barsalou

Root Cause or System Design Flaws?

First, I think this is a matter of semantics, but since we use words to communicate, semantics matters. In my world (and in the intent of Root Cause Analysis), a 'root cause' IS the system design flaw, whether in a physics-based problem or a people/process-based problem. (I actually prefer the term causal mechanism or causal system, as it is rare for a single simple factor to cause a Problem.) It is difficult to redesign a system, process, or product to prevent a Problem if we don't know exactly what is causing the Problem.

Secondly, I do agree that too often the teaching and use of RCA rely on opinion, fishbone diagrams, and multicolored voting dots to arrive at the 'cause'. Effective root cause analysis relies on data, solid investigative practices, and objective evidence that a factor or set of factors actually causes the Problem. The use of opinion is fake RCA, or maybe we should call it Zombie RCA.

Effective Solution Development is a whole other topic...
