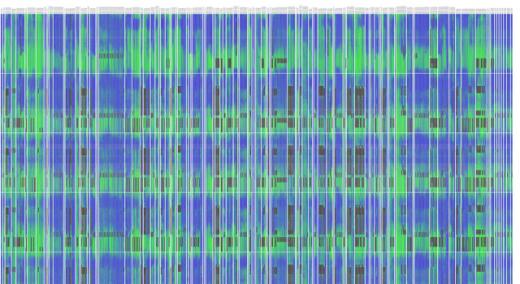

As a quality professional, you’ve probably heard the famous quote from W. Edwards Deming, “In God we trust; all others bring data.” Thanks to technological advancements in our industry, data exist more abundantly than ever. This presents a new challenge for those tasked with extracting and communicating useful knowledge from data.

Arguably, Deming’s intent was actually for all others to bring knowledge, because data alone lack context and interpretation. Knowledge, instead, is the outcome of reliable exploratory practices applied to your data, which, when followed, allow insights and opportunities to emerge. Problems with data can block access to this knowledge. Here are five common problems you may encounter in this data-intense world.

…

Comments

Bringing Data

I understand what you mean about bringing knowledge, but I took Deming's quote to mean bring data to support your assertion. In some, or many, cases this is a precursor to knowledge; perhaps an idea or hypothesis. The data provide context for the idea. A loose example: if I think climate change isn't real but don't actually know (a lack of knowledge), the data I may provide for discussion or assessment may be that temperatures are remaining constant and weather patterns have stabilized.

This is not meant to be an argument. I'm just sharing a thought with hopes of discussion or feedback. All that said, excellent article. And no, the data/knowledge question is not all I took away. I'm a fan of your work with Quality Digest in general.
