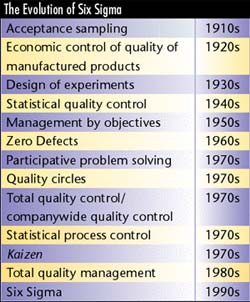

The management-system approach to quality, described in my previous article (August 2001), evolved from standards for certain

regulated industries and led to the widespread acceptance of ISO 9000 and its industry-specific derivatives. A second approach--which we will focus on in this column--stresses quantitative,

analytical methods and techniques. This approach started with statistical measures to monitor production processes in cases where 100-percent inspection or testing would be impractical or

prohibitively expensive. It culminated in the current Six Sigma movement.  During the 1920s, researchers at Bell Telephone Laboratories began to apply statistical methods to the control of quality. Foremost among them were Walter A. Shewhart (who

developed the control chart in a memo dated May 16, 1924, and who taught both Joseph M. Juran and W. Edwards Deming), and Harold Dodge and Harry Romig, who jointly

developed acceptance sampling plans in 1928 to use inspection data for control.

The concept of "six sigma" as a desired performance level began with

acceptance sampling. Dodge and Romig defined "lot quality" as analogous to "unit quality." In place

of a tolerance on each unit, they proposed a "lot tolerance in percent defective" (LTPD) and designed sampling plans to ensure the likely rejection of lots outside this tolerance.

Implicit in these plans were nominal values called acceptable quality levels (AQLs). When confronted with a lot, the inspector must decide whether to screen the lot

beforehand or to allow the defectives to be discovered as the lot is used. The latter is more expensive per defective unit found, but if the number of such units is small,

it will cost less than inspecting every unit in the lot. The AQL was intended to be the point below which discovery in use was less expensive than prior screening.
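The break-even reasoning can be sketched in a few lines of Python. This is a minimal illustration, not Dodge and Romig's own calculation: the function names and cost figures are hypothetical, and it assumes a fixed screening cost per unit and a fixed, larger cost for each defective discovered in use.

```python
def break_even_fraction(screen_cost_per_unit, in_use_cost_per_defective):
    """Fraction defective at which screening and discovery in use cost the same."""
    return screen_cost_per_unit / in_use_cost_per_defective


def cheaper_policy(lot_size, fraction_defective,
                   screen_cost_per_unit, in_use_cost_per_defective):
    """Compare the cost of screening every unit with the cost of finding defectives in use."""
    screening = lot_size * screen_cost_per_unit
    in_use = lot_size * fraction_defective * in_use_cost_per_defective
    return "screen the lot" if screening < in_use else "discover in use"


# Hypothetical costs: $0.50 to inspect a unit, $25 per defective found in use.
print(break_even_fraction(0.50, 25.0))         # break-even fraction defective
print(cheaper_policy(1000, 0.01, 0.50, 25.0))  # lot below the break-even point
print(cheaper_policy(1000, 0.05, 0.50, 25.0))  # lot above the break-even point
```

Under these assumed costs the break-even point is 2 percent defective, and in this sketch that break-even fraction plays the role of the AQL.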

This was widely misunderstood afterward to mean that some defectives were acceptable. Shewhart described the concepts of process capability and control charts in his

book Economic Control of Quality of Manufactured Product (Van Nostrand Co. Inc., 1931), which emphasized focusing on the customer (translating wants into

physical characteristics) and minimizing process variation. To Shewhart, the joint purpose of process-capability studies and control charts was to identify the current

capability level, reduce variation, and maintain tighter control of the aim of the process. Unfortunately, for many companies, process capability is a static

experience--measure what the current capability is and record it--rather than a dynamic methodology for reducing variation and controlling the aim of the process.
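One conventional way to put numbers on both halves of this idea, spread and aim, is the pair of capability indices usually written Cp and Cpk. They are later conventions rather than something taken from Shewhart's book, and the measurements and specification limits below are made up for illustration.

```python
from statistics import mean, stdev


def capability_indices(measurements, lower_spec, upper_spec):
    """Return (Cp, Cpk) for a sample, using the sample standard deviation."""
    mu = mean(measurements)
    sigma = stdev(measurements)
    # Cp compares the tolerance width with the process spread (variation only).
    cp = (upper_spec - lower_spec) / (6 * sigma)
    # Cpk also penalizes a process whose aim has drifted off center.
    cpk = min(upper_spec - mu, mu - lower_spec) / (3 * sigma)
    return cp, cpk


# Hypothetical measurements against hypothetical limits of 9.4 and 10.6.
centered = [9.8, 10.0, 10.2, 10.1, 9.9]
shifted = [x + 0.3 for x in centered]  # same spread, aim moved off target

print(capability_indices(centered, 9.4, 10.6))
print(capability_indices(shifted, 9.4, 10.6))
```

The two samples have the same spread, so Cp is essentially unchanged, but Cpk drops for the shifted sample because its aim has moved toward the upper limit. Recomputing the indices after each improvement, rather than recording them once, is what turns the measurement into the dynamic exercise described above.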

Later, World War II spawned a push for quality. In the 1940s, members of the Western Electric team were brought together by the War Department (now the

Department of Defense) to enhance the quality and productivity of items needed for the war effort. This group, which met at Columbia University (and was later

referred to as the Columbia Research Group), created documents such as MIL-STD-105 (sampling procedures and tables for inspection by attributes) and

MIL-STD-414 (sampling procedures and tables for inspection by variables for percent defective). These documents were designed to make scientific sampling

easier to apply for the manufacturers of war supplies but later became standard tools. At first, the War Department had difficulty getting manufacturers to accept

sampling. Manufacturers were concerned about potential type-1 errors--rejecting lots that were good. They believed that

the military might take a small sample and, based on one or two defects, reject an entire lot. The Columbia Research Group redesigned the Dodge-Romig

tables--which were based on LTPD--and substituted an acceptable quality level, ensuring that lots with defect percentages no higher than the AQL would have a

low probability of being rejected. The end result was that many lots with defect percentages between the AQL and the LTPD were accepted.
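The effect is easy to see by computing the probability of acceptance for a single sampling plan. The sketch below assumes a binomial model (a lot that is large relative to the sample), and the plan itself, a sample of 80 with an acceptance number of 2, is a hypothetical example rather than an entry from the MIL-STD-105 tables.

```python
from math import comb


def probability_of_acceptance(sample_size, acceptance_number, fraction_defective):
    """P(accept) when the lot passes if the sample holds at most acceptance_number defectives."""
    p = fraction_defective
    return sum(
        comb(sample_size, k) * p**k * (1 - p) ** (sample_size - k)
        for k in range(acceptance_number + 1)
    )


# Hypothetical plan: inspect 80 units, accept the lot if 2 or fewer are defective.
for p in (0.01, 0.03, 0.065):
    pa = probability_of_acceptance(80, 2, p)
    print(f"{p:.1%} defective -> P(accept) = {pa:.2f}")
```

With this assumed plan, a lot at 1 percent defective is accepted roughly 95 percent of the time and a lot at 6.5 percent defective only about 10 percent of the time, yet a lot at 3 percent defective, well above the first figure, is still accepted more often than not.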

After the war, all types of commercial goods were in great demand, and statistical quality control (SQC) was set aside (except by the military and NASA). It didn't matter if the product was

good or bad; the pent-up demand was so great that it would be sold. In the early 1960s, SQC reemerged, and efforts were put in place to use these

tools more effectively. By the late 1960s, however, management by objectives and Zero Defects were the reigning fads, and SQC remained on the back burner once

again. The early 1970s and the reemergence of Deming and Juran (via Japan) brought quality circles and the renewed application of basic problem solving and

SQC tools. The 1980s and 1990s brought total quality management (TQM) and Six Sigma, both of which reemphasized quantitative analytical tools. But we'll discuss those in the next article in this series.

About the author

Stanley A. Marash, Ph.D., is chairman and CEO of STAT-A-MATRIX Inc. E-mail smarash@qualitydigest.com. Fusion Management is a trademark of STAT-A-MATRIX Inc. ©2001 STAT-A-MATRIX Inc. All rights reserved.